DexCanvas: Bridging Human Demonstrations and Robot Learning for Dexterous Manipulation

DexRobot Co. Ltd. · University of Michigan · Shanghai Jiao Tong University · Chongqing University · East China University of Science and Technology

*Equal contribution

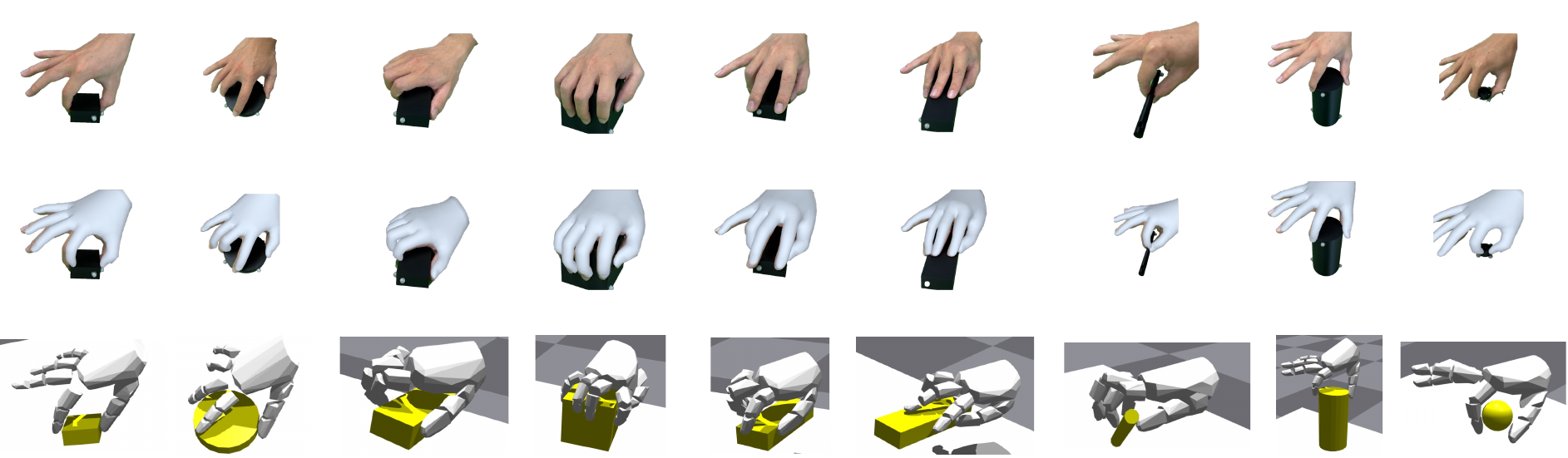

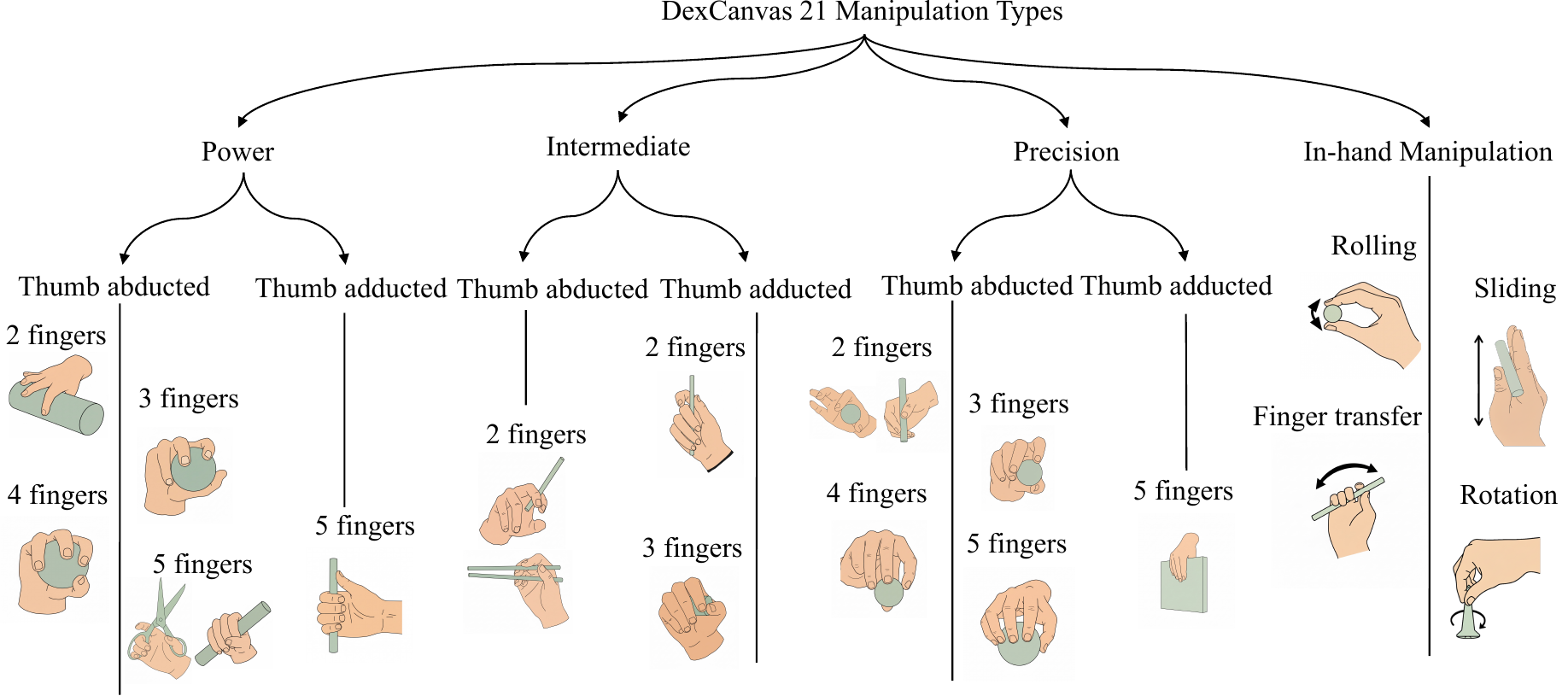

A large-scale hybrid real-synthetic dataset containing 7,000 hours of human manipulation data, generated from a seed of 70 hours of real demonstrations, organized into 21 fundamental manipulation types, and annotated with physics-validated contact forces.